Products & Services

Koha is the first free and open source library automation package. Equinox’s team includes some of Koha’s core developers.

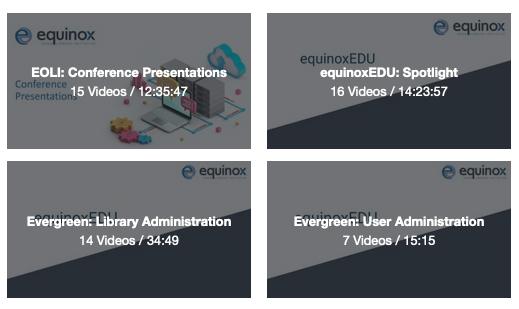

Evergreen is a unique and powerful open source ILS designed to support large, dispersed, and multi-tiered library networks.

VuFind® enables library users to discover all of your library’s collections, including catalog records, journals, digital items, and more.

Aspen Discovery provides out-of-the-box discovery that integrates with your library’s electronic content providers.

Fulfillment is an open source interlibrary loan management system. Fulfillment can be used alongside or in connection with any integrated library system.

CORAL is an open source electronic resources management system. Its interoperable modules allow libraries to streamline their management of electronic resources.

SubjectsPlus is a user-friendly, open source content management system for information sharing. SubjectsPlus gives library staff an easy-to-use interface for creating web content.

Customized For Your Library

Why Choose Equinox?

- Equinox is different from most ILS providers. As a non-profit organization, our guiding principle is to provide a transparent, open software development process, and we release all code developed to publicly available repositories.

- Equinox is experienced in serving libraries of all types in the United States and internationally. We’ve supported and migrated libraries of all sizes, from single library sites to full statewide implementations.

- Equinox is technically proficient, with skilled project managers, software developers, and data services staff ready to assist you. We’ve helped libraries automating for the first time and those migrating from legacy integrated library systems.

- Equinox knows libraries. More than fifty percent of our team are professional librarians with direct experience working in academic, government, public, and special libraries. We understand the context and ecosystem of library software.

We are excited about the quality of access and services we are able to deliver with Koha alongside support by Equinox.

We appreciated the partnership with the team at Equinox as they took time to learn our unique items and problem-solve with us through our specific complexities. The solution-focused attitude of the team has been wonderful.